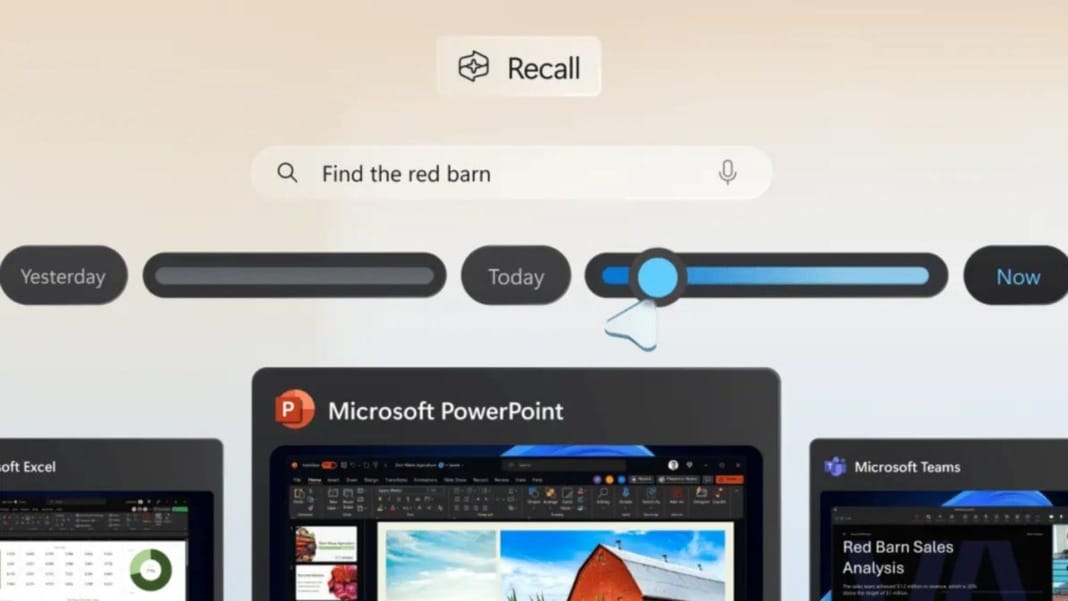

Microsoft has shared an update on the security and privacy protections in its latest AI tool, Recall. In a detailed blog post, the company outlined the measures it has taken to safeguard users’ data and prevent potential privacy issues. Key topics in the post include Recall’s security architecture and the technical controls designed to keep users in control of their data. Despite these assurances, Recall is optional but cannot be fully uninstalled from your device, as Microsoft recently confirmed.

The blog post dives deep into the security challenges Recall faces. One of the main design principles outlined is that “the user is always in control.” This means that users can decide whether or not to enable Recall during the setup of their new Copilot+ PC. Microsoft emphasises that Recall will only run on devices that meet strict security requirements, including Trusted Platform Module (TPM) 2.0, System Guard Secure Launch, and Kernel DMA Protection. These requirements are intended to strengthen the overall security of the system.

Recall’s user control features

The company highlighted the importance of keeping users in control of what Recall can access. During your device’s initial setup, you can opt out of using Recall, and if you do not activate it, Recall stays off. Microsoft has also clarified that users can disable Recall via Windows settings, although it remains to be seen whether this completely removes the tool from your system.

Should you decide to use Recall, you can filter out certain apps or websites, preventing Recall from saving any data linked to them. Additionally, Recall will not save anything while you browse in private (incognito) mode. You also control how long Recall retains your data and how much disk space it uses for storing snapshots. If you ever want to delete specific data, you can erase snapshots from a particular time range or remove all data related to a particular app or website.
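To make these controls concrete, here is a minimal Python sketch of how such rules could fit together. It is purely illustrative and not Microsoft’s implementation: the `Snapshot` and `SnapshotStore` names, the 90-day default and the `bank.example` site are all hypothetical, and the disk-space limit is left out for brevity.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Snapshot:
    taken_at: datetime
    app: str               # app or website the snapshot came from
    private: bool = False  # True if taken during private browsing


@dataclass
class SnapshotStore:
    # All names and defaults here are hypothetical, for illustration only.
    excluded_apps: set[str] = field(default_factory=set)
    retention: timedelta = timedelta(days=90)
    snapshots: list[Snapshot] = field(default_factory=list)

    def save(self, snap: Snapshot) -> bool:
        """Store a snapshot unless the user's filters reject it."""
        if snap.private or snap.app in self.excluded_apps:
            return False
        self.snapshots.append(snap)
        return True

    def expire(self, now: datetime) -> None:
        """Drop snapshots older than the retention window."""
        cutoff = now - self.retention
        self.snapshots = [s for s in self.snapshots if s.taken_at >= cutoff]

    def delete_range(self, start: datetime, end: datetime) -> None:
        """Erase every snapshot taken between start and end (inclusive)."""
        self.snapshots = [
            s for s in self.snapshots if not (start <= s.taken_at <= end)
        ]

    def delete_app(self, app: str) -> None:
        """Erase every snapshot linked to one app or website."""
        self.snapshots = [s for s in self.snapshots if s.app != app]
```

In this sketch, filtering happens before anything is stored, so an excluded app or a private browsing session never leaves data behind that would need deleting later.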

Microsoft adds a system tray icon to indicate when Recall is collecting snapshots, and you can pause collection at any time. For added security, accessing Recall content requires biometric verification through Windows Hello. Microsoft has confirmed that all sensitive information stored by Recall is encrypted and linked to your Windows Hello identity, so that other users on the same device cannot access your Recall data. The data stays within a Virtualisation-based Security Enclave (VBS Enclave), and only certain portions of it are allowed to leave the enclave when authorised.

Encryption and sensitive data

Microsoft has also provided more details on Recall’s architecture. The company confirmed that “processes outside the VBS Enclaves never directly receive access to snapshots or encryption keys.” Instead, external processes only receive data that has been authorised and released from the enclave. To further safeguard sensitive content, filters are in place to stop Recall from saving certain types of information, such as passwords, credit card numbers, and ID details.
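The article does not describe how these filters recognise sensitive content. As a rough illustration only, the Python sketch below shows one common heuristic for spotting credit card numbers: find digit runs of card length and check them with the Luhn checksum. The function names are hypothetical, this is an assumed technique rather than Recall’s actual method, and passwords and ID details are not covered.

```python
import re

# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){13,19}\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def contains_card_number(text: str) -> bool:
    """Heuristic: does the text contain something shaped like a card number?"""
    for match in CARD_CANDIDATE.finditer(text):
        digits = re.sub(r"\D", "", match.group())
        if luhn_valid(digits):
            return True
    return False


def should_skip_snapshot(screen_text: str) -> bool:
    """Hypothetical hook: skip saving when sensitive content is detected."""
    return contains_card_number(screen_text)
```

A checksum test like this reduces false positives from ordinary long numbers, but any real filter would need far broader detection than this sketch provides.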

In another step towards bolstering security, Microsoft has worked with a third-party vendor to conduct a penetration test, intended to confirm that Recall meets high security standards. This third-party verification aims to assure users that Recall is a secure tool, as Microsoft acknowledges the existing concerns surrounding its use.

Will the new measures be enough?

The introduction of these new security features reflects Microsoft’s awareness of the scepticism surrounding Recall. Since its announcement, some users have voiced concerns about potential privacy issues, and a small group has even boycotted the AI tool entirely. Whether the new measures will alleviate these concerns remains to be seen. However, Microsoft is making strides to prove that Recall can be trusted to handle sensitive data safely.