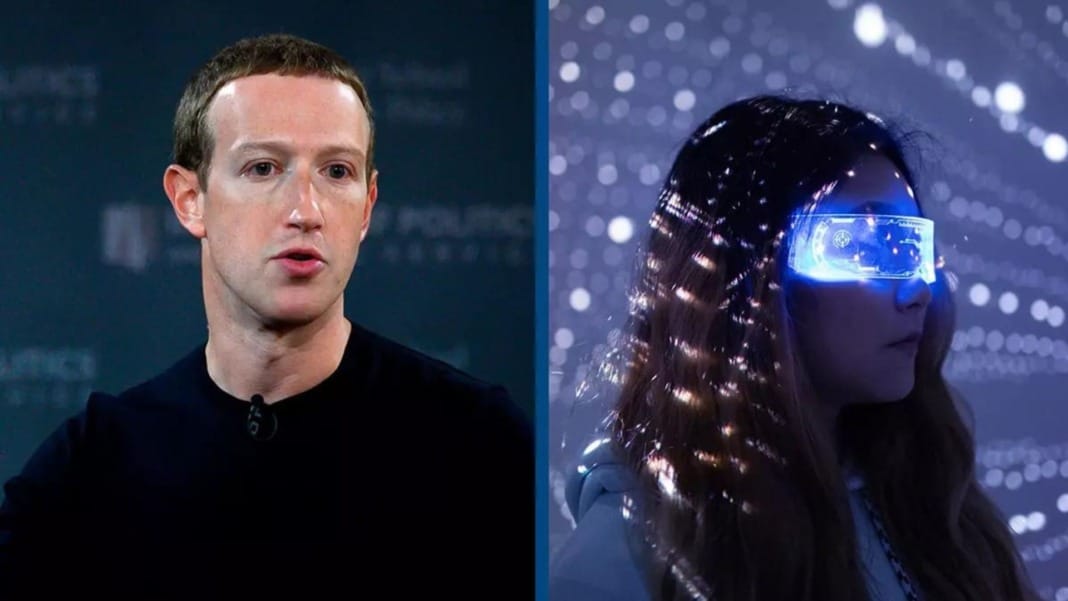

During the recent SIGGRAPH 2024 conference, Meta CEO Mark Zuckerberg shared an intriguing vision for the future of wearable technology. Speaking alongside NVIDIA CEO Jensen Huang, Zuckerberg focused on AI's potential to revolutionise the way we interact with smart glasses. His remarks on the future of advanced wearables drew significant attention during the hour-long session.

AI-powered glasses: The next big thing?

Zuckerberg outlined his expectations for the next generation of AI-powered Ray-Ban smart glasses. He predicted that these glasses could become a mainstream consumer product, eventually reaching hundreds of millions of users. Priced at around US$300, these devices are expected to surpass the current capabilities of the Ray-Ban Meta Smart Glasses with a range of new AI-driven features.

The Meta CEO envisions display-less AI glasses becoming a major product category, eventually as commonplace as smartphones. Future models are likely to incorporate more advanced AI features, which could make them appealing to tech enthusiasts and everyday users alike.

A look at the current generation

To understand the potential of these future devices, it helps to look at the current generation of Ray-Ban Meta Smart Glasses. Launched in 2023, these glasses are the product of a collaboration between Meta and EssilorLuxottica, Ray-Ban's parent company. They are equipped with a Qualcomm Snapdragon AR1 Gen 1 processor, allowing them to record video in 1080p resolution at 30 frames per second. One of their standout features is the ability to livestream hands-free directly to Facebook and Instagram, which opens up new possibilities for content creators.

Zuckerberg believes that the next iteration of these glasses will feature "super interactive AI." Current AI systems operate on a turn-by-turn basis, in which the user prompts the AI and then receives a response; future models would instead allow for natural, real-time interaction. The AI would be able to process what the wearer sees directly through the onboard camera, enabling a more seamless and intuitive experience.

What to expect from future models

Zuckerberg also hinted at several features these future smart glasses might offer, including:

- Visual language understanding: The AI could interpret and describe the wearer’s surroundings in real time.

- Real-time translation: This feature would enable conversations across language barriers, making international communication easier.

- Advanced camera sensors: These could be used to capture high-quality photos and videos.

- Video calling: Integrated with popular apps like WhatsApp, this feature would bring a new level of connectivity to the glasses.

While some models may include displays, specifically holographic ones, Zuckerberg noted that display-less versions would offer a more streamlined design that resembles standard eyewear. The focus is on creating glasses that look good while packing as much technology as possible into their slim frames.

<iframe width="560" height="315" src="https://www.youtube.com/embed/w-cmMcMZoZ4?si=-YF-j4I6ypZjzElV" title="YouTube video player" frameborder="0" allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture; web-share" referrerpolicy="strict-origin-when-cross-origin" allowfullscreen></iframe>

Although these advanced glasses are still some way from becoming a reality, Zuckerberg's vision points to a future where wearable tech could be as integral to our lives as smartphones are today. Given how quickly AI capabilities are developing, it likely won't be long before we hear more about these AI-powered glasses and their potential to change how we interact with the world.