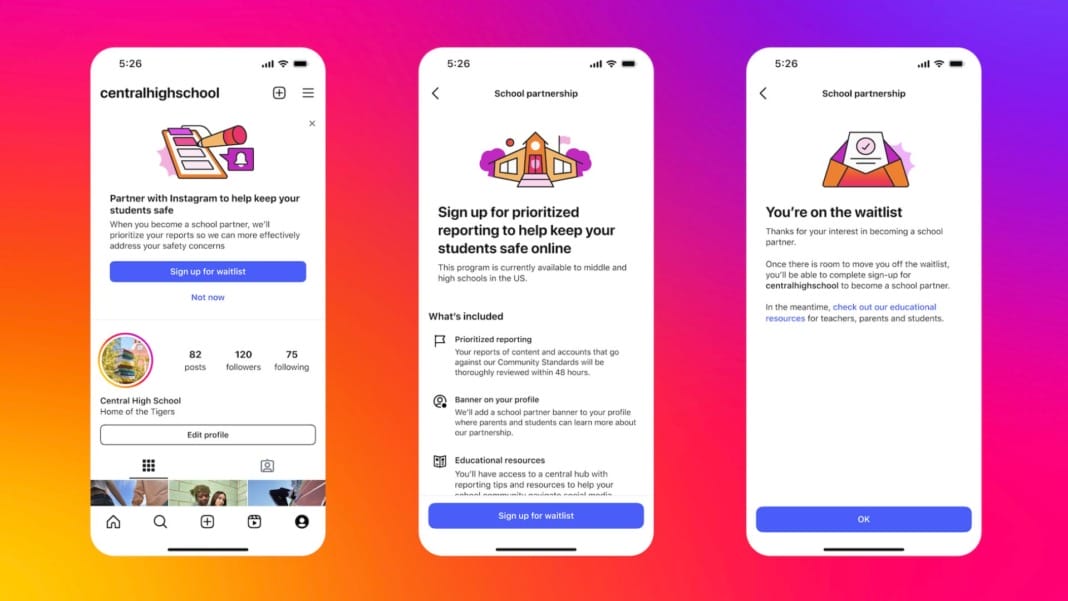

Instagram has launched a new partnership programme designed to help schools address online bullying and student safety more effectively. Announced on Tuesday, the initiative will allow verified school accounts in the U.S. to report harmful content more quickly.

Currently open to all middle and high schools nationwide, the programme allows educators to flag posts or student accounts that may violate Instagram’s guidelines. These reports will be prioritised for review, ensuring faster action on potential safety concerns. Schools will also be notified of any steps taken in response to their reports.

Instagram’s goal with this initiative is to provide educators with a direct way to address online bullying, harassment, and other safety issues affecting students. The company hopes to create a safer digital environment for young users by expediting the review process.

Schools can sign up for the programme

Participating schools will receive a “school partner” banner on their Instagram profiles, indicating their involvement in the programme. Instagram is also rolling out educational materials to support safe app usage, providing resources for teachers, parents, guardians, and students.

The programme has been in development for over a year and was tested in collaboration with 60 schools. Instagram also refined the initiative with the International Society for Technology in Education (ISTE) and the Association for Supervision and Curriculum Development (ASCD).

Schools that have not yet joined can be added to a waitlist, allowing them to participate in the future.

This comes amid growing calls for tighter social media regulations

Instagram’s latest move comes as U.S. lawmakers push for stricter regulation of social media platforms, particularly regarding children’s online safety. The Kids Off Social Media Act (KOSMA), which seeks to ban social media access for children under 13, has gained momentum. In February, the Senate Committee on Commerce approved the bill, and two related pieces of legislation, the Kids Online Safety Act (KOSA) and the Children and Teens’ Online Privacy Protection Act (COPPA 2.0), have already passed in the U.S. Senate.

In response to growing concerns about online safety, Instagram has introduced other measures to protect young users. For example, accounts created by users under 16 are set to private by default, limiting exposure to strangers. The platform has also added parental controls and restrictions on messaging to enhance child safety.

With its new school partnership programme, Instagram aims to reinforce its commitment to protecting students and making the platform a safer space for young users.