As we embark on a journey into the world of artificial intelligence (AI), it’s crucial to remember that AI is not a mystical entity but a creation of human ingenuity. It’s a technology that augments human abilities, aiding in tasks that range from the mundane to the complex. But even as we marvel at what AI can do, it’s essential to understand its limitations. Our perspective on AI should be balanced, born of neither fear nor uninformed awe.

This article aims to shed light on the practical capabilities and boundaries of AI. It’s not a tale of robots taking over the world but rather an exploration of how AI technologies shape our world, the issues they solve, the challenges they pose and, notably, the areas where they cannot operate effectively.

A glimpse into the world of AI capabilities

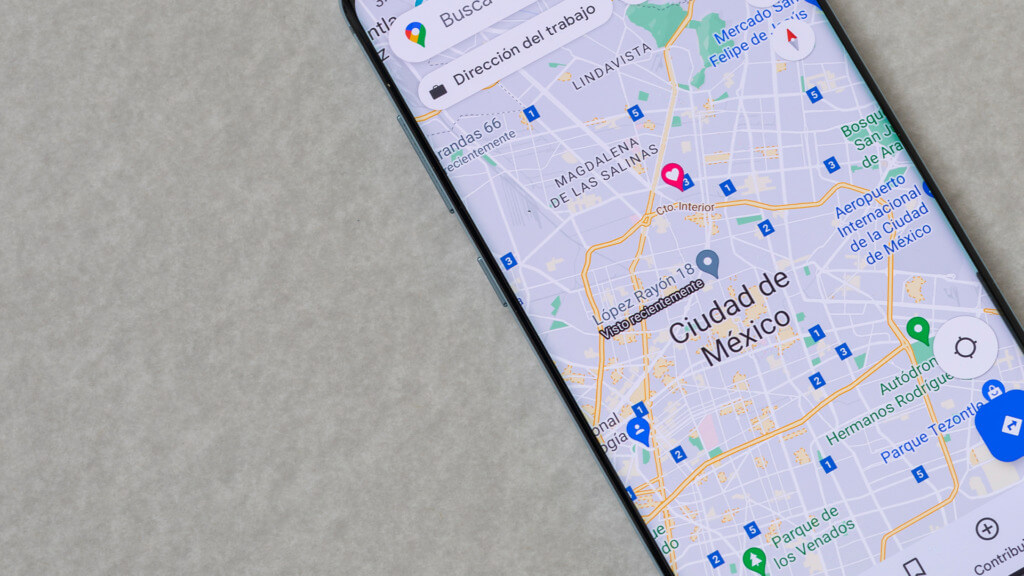

You use AI more often than you might realise. Whenever you ask Siri for the weather, use Google Maps for directions, or see recommendations on your Netflix feed, you interact with AI. These technologies use algorithms to learn from data, enabling them to make predictions, recommendations or decisions that can help you in your daily life.

In the healthcare sector, AI algorithms are changing how doctors diagnose and treat diseases. From predicting the likelihood of heart disease to aiding in the detection of cancerous tumours in imaging data, AI is augmenting the capabilities of healthcare professionals and can help improve patient outcomes.

AI has also made significant strides in natural language processing (NLP), the technology behind voice assistants like Alexa and Google Home. NLP enables computers to understand, process, and generate human language, allowing you to talk to your devices much as you would to another person.

Understanding the limitations of AI

Understanding the limitations of AI is crucial for developing realistic expectations and avoiding misconceptions. While AI has made remarkable advancements, it’s essential to recognise the boundaries within which it operates.

One significant limitation of AI lies in the quality of, and the biases in, the data it learns from. AI algorithms rely on vast amounts of data to train and to make accurate predictions or decisions. However, if the training data is biased or incomplete, the outcomes can be biased too. For example, an AI system trained on data that predominantly represents one racial or gender group may make biased decisions that perpetuate discrimination. Addressing data biases and ensuring diversity and representativeness in training sets are critical to developing fair and ethical AI systems.
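To make the idea of representativeness concrete, here is a minimal Python sketch of one very basic check: measuring how much of a training set each group accounts for and flagging groups that fall below a chosen share. The dataset, the field name `group`, the helper functions, and the 10% threshold are all hypothetical and chosen purely for illustration. A real fairness audit involves far more than counting records.

```python
from collections import Counter


def group_shares(records, key):
    """Return each group's share of the dataset as a fraction of all records."""
    counts = Counter(record[key] for record in records)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def underrepresented(shares, min_share):
    """List the groups whose share falls below min_share, in sorted order."""
    return sorted(group for group, share in shares.items() if share < min_share)


# Hypothetical training set: 80 records from group A, 15 from B, 5 from C.
training_data = (
    [{"group": "A"}] * 80
    + [{"group": "B"}] * 15
    + [{"group": "C"}] * 5
)

shares = group_shares(training_data, "group")
flagged = underrepresented(shares, min_share=0.10)  # threshold is an assumption

print(shares)
print(flagged)
```

In this made-up dataset, group C makes up only 5% of the records, so it is the one flagged. A check like this says nothing about whether the labels themselves are biased. It only shows who is, and is not, well represented in the data.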

Another limitation of AI is its inability to understand context beyond its training. AI algorithms excel at recognising patterns and making predictions based on the patterns they have learned. However, they lack the nuanced understanding of human language and contextual cues that humans possess. This limitation becomes apparent when interacting with voice assistants or chatbots. While they may respond accurately to straightforward queries, they often struggle with complex or ambiguous requests and may require precise phrasing or predefined commands. Enhancing AI’s contextual understanding is an ongoing research challenge that aims to improve its ability to comprehend human intentions and respond appropriately.
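The reliance on precise phrasing can be illustrated with a deliberately simplified Python sketch. This toy responder only matches predefined keywords. It is not how any real voice assistant works, and the `COMMANDS` table and `respond` function are hypothetical names invented for this example. It simply shows why a system that matches patterns, without grasping intent, can miss a request that a person would understand at once.

```python
# Hypothetical table of predefined commands and their canned replies.
COMMANDS = {
    "weather": "Here is today's forecast.",
    "directions": "Starting navigation.",
}

FALLBACK = "Sorry, I didn't understand that."


def respond(query):
    """Reply only if the query contains one of the predefined keywords."""
    words = query.lower().replace("?", "").split()
    for keyword, reply in COMMANDS.items():
        if keyword in words:
            return reply
    return FALLBACK


# A direct query contains the keyword "weather" and is matched.
direct = respond("What's the weather today?")

# An indirect query about the same thing contains no keyword and falls through.
indirect = respond("Do I need an umbrella later?")

print(direct)
print(indirect)
```

A person would recognise both questions as being about the weather. The toy responder handles only the first, because the second depends on context it has no way to infer.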

Emulating human emotions and creativity remains an elusive feat for AI. Although AI algorithms can generate artwork, compose music, or write stories, they lack the emotional depth and subjective experience humans bring to these creative endeavours. AI generates output based on learned patterns and statistical analysis, without any real understanding of, or emotional connection to, the art it creates. The ability to capture and replicate human emotions and creativity is an area of active exploration in AI research, but it is still far from achieving human-like levels of expression.

By acknowledging these limitations, we can set realistic expectations for AI and avoid overestimating its capabilities. It is crucial to understand that AI is a tool created by humans, and its performance is inherently limited by the data it learns from and the algorithms designed to process that data. However, rather than seeing these limitations as roadblocks, we can view them as opportunities for improvement and innovation.

Efforts are underway to address these limitations and push the boundaries of AI. Researchers are actively developing more robust techniques to mitigate bias in AI systems and to make those systems more transparent, interpretable, and accountable. Advancements in natural language processing aim to improve AI’s contextual understanding, enabling more natural and flexible interactions with humans. Moreover, ongoing research in affective computing explores ways to imbue AI with emotional intelligence, allowing it to better understand and respond to human emotions.

Setting the stage for the future

As we move forward, it’s important to remember that AI is a tool that serves us, not an entity that controls us. It’s capable of great things, but only within the boundaries we set for it.

The future of AI is not just about pushing the boundaries of what it can do but also about addressing its limitations. This includes tackling ethical issues like data bias, improving AI’s ability to understand context, and even exploring whether AI can be creative or emotional in a way that’s meaningful to humans.

And finally, “Building bridges, not barriers” would be an apt phrase to summarise our approach to the future of AI. We should build bridges between AI’s capabilities and our needs, between different disciplines to create more robust and ethical AI systems, and between different communities to ensure that AI’s benefits are shared by all.

The future of AI holds great promise, but it also demands responsibility. We have the power to shape this technology, to decide its role in our lives and society. Let’s use this power wisely to harness AI’s potential, respect its limitations, and address the challenges it poses. After all, the goal isn’t to create an AI that replaces humans but one that enhances our abilities and enriches our lives.